A significant growth area in the global eyewear market is the direct-to-consumer model, where advances in technology have made it possible to offer high-quality eyeglasses tailored to a customer’s specific needs and preferences, shipped within five business days.

Zenni, an online-only eyeglass retailer now in its 20th year, sells 6 million pairs annually in the United States and has surpassed 50 million pairs of glasses sold in total.

Zenni packages and ships about 3 million orders per year from its Novato warehouse in California. For years, workers have manually placed each pair of glasses into a clamshell case and then inserted the case into a tabletop mechanical bagging machine that generates a labeled polybag, ready for shipment (Figure 1). This process involved five manual steps and was prone to error. If just one item was scanned out of sync with the product and ended up in the wrong polybag, it could result in several unhappy customers.

Zenni was by no means new to automation: its manufacturing operation is completely automated. But, as is typical in logistics operations, automating the work of a dexterous human seemed too complicated. In 2022, the team at Osaro met with Zenni’s operations leaders to size up the problem and recommend ways to boost throughput and reduce the messed-up order (MUO) rate.

After evaluating Zenni’s existing manual process, the team defined the workflow:

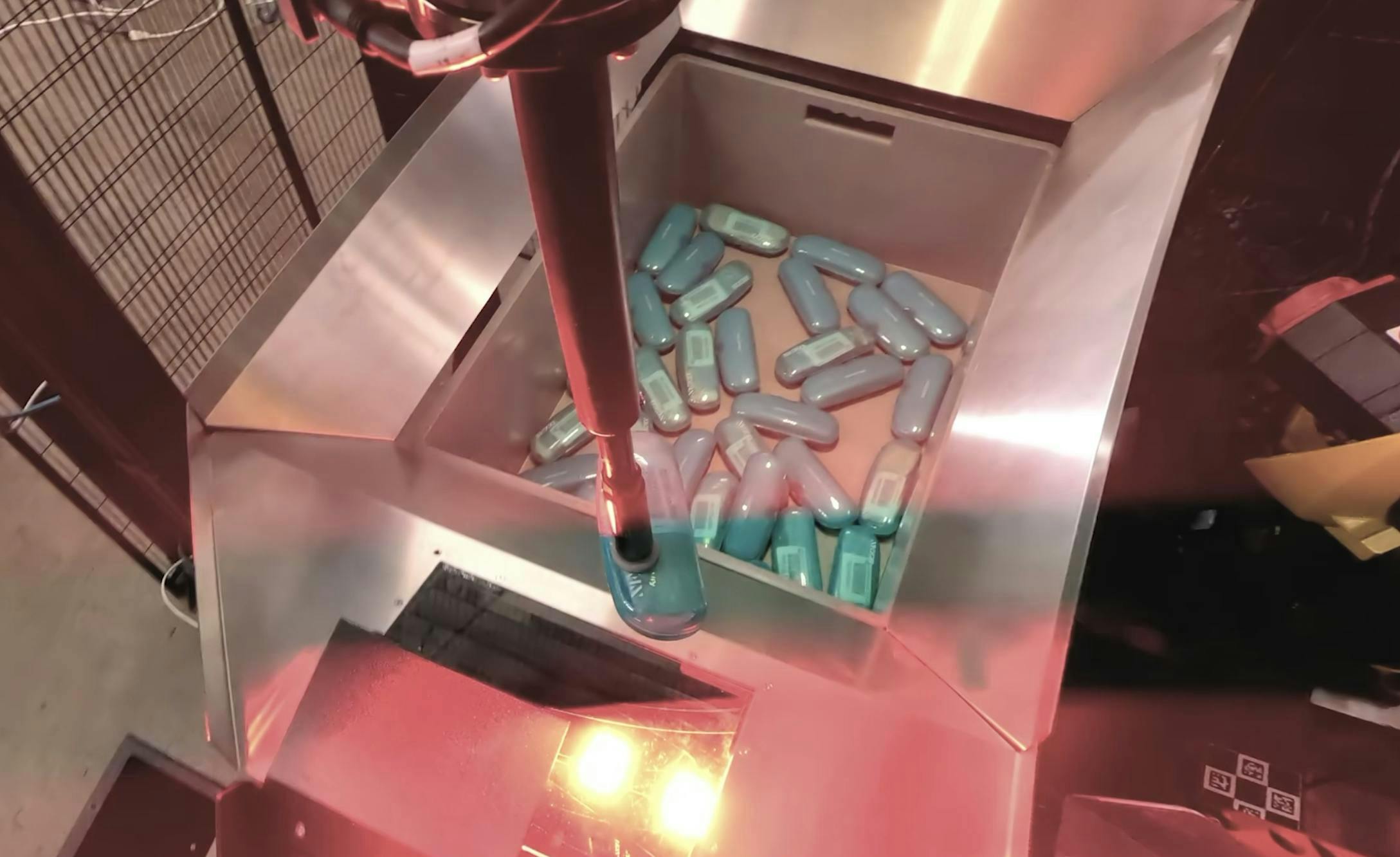

- Identify an eyeglass case from among several hundred identical cases in the shipping container.

- Grasp and pick one case without obscuring the barcode.

- Scan the barcode through the translucent case to identify the order number.

- Send that information to the warehouse management software to trigger printing the order’s polybag.

- Place the case in the chute of the bagging machine.

- Verify the case was successfully deposited into the polybag.
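A label printed for the wrong case closely mirrors the out-of-sync error described earlier, so it can help to treat these six steps as a strict sequence in which the barcode must be read before a bag is requested. The minimal Python sketch below enforces that ordering. The `Step` and `CaseCycle` names are hypothetical and are not taken from Osaro’s or Zenni’s software.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import List, Optional


class Step(Enum):
    """The six workflow steps, in the order they must occur (hypothetical names)."""
    IDENTIFY = auto()     # locate one case among hundreds in the container
    PICK = auto()         # grasp it without covering the barcode
    SCAN = auto()         # read the order barcode through the translucent case
    REQUEST_BAG = auto()  # ask the warehouse management software to print this order's polybag
    PLACE = auto()        # release the case onto the bagger chute
    VERIFY = auto()       # confirm the case landed in the polybag


STEPS: List[Step] = list(Step)  # Enum iteration follows definition order


@dataclass
class CaseCycle:
    """Tracks one case through the workflow and rejects out-of-order steps."""
    order_id: Optional[str] = None
    completed: List[Step] = field(default_factory=list)

    @property
    def done(self) -> bool:
        return len(self.completed) == len(STEPS)

    def advance(self, step: Step, order_id: Optional[str] = None) -> None:
        if self.done:
            raise RuntimeError("cycle already complete")
        expected = STEPS[len(self.completed)]
        if step is not expected:
            raise RuntimeError(f"got {step.name}, expected {expected.name}")
        if step is Step.SCAN:
            if not order_id:
                raise RuntimeError("a scan must yield an order ID")
            self.order_id = order_id  # later steps can only use the ID read from this case
        self.completed.append(step)


# Usage: walk one case through all six steps.
cycle = CaseCycle()
cycle.advance(Step.IDENTIFY)
cycle.advance(Step.PICK)
cycle.advance(Step.SCAN, order_id="ORDER-0001")  # hypothetical order ID
for step in (Step.REQUEST_BAG, Step.PLACE, Step.VERIFY):
    cycle.advance(step)
print(cycle.done, cycle.order_id)  # True ORDER-0001
```

Because a bag can only be requested after a successful scan of the case in the gripper, a skipped or repeated scan raises an error instead of silently shifting labels onto the wrong orders.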

The functional systems involved are:

- a six-axis robotic arm to pick up each case and deposit it into a polybag

- computer-vision and artificial-intelligence (AI) systems to control the robot and pick up each case without obscuring the order barcode

- sensors and lighting to scan the barcode inside each eyeglass case

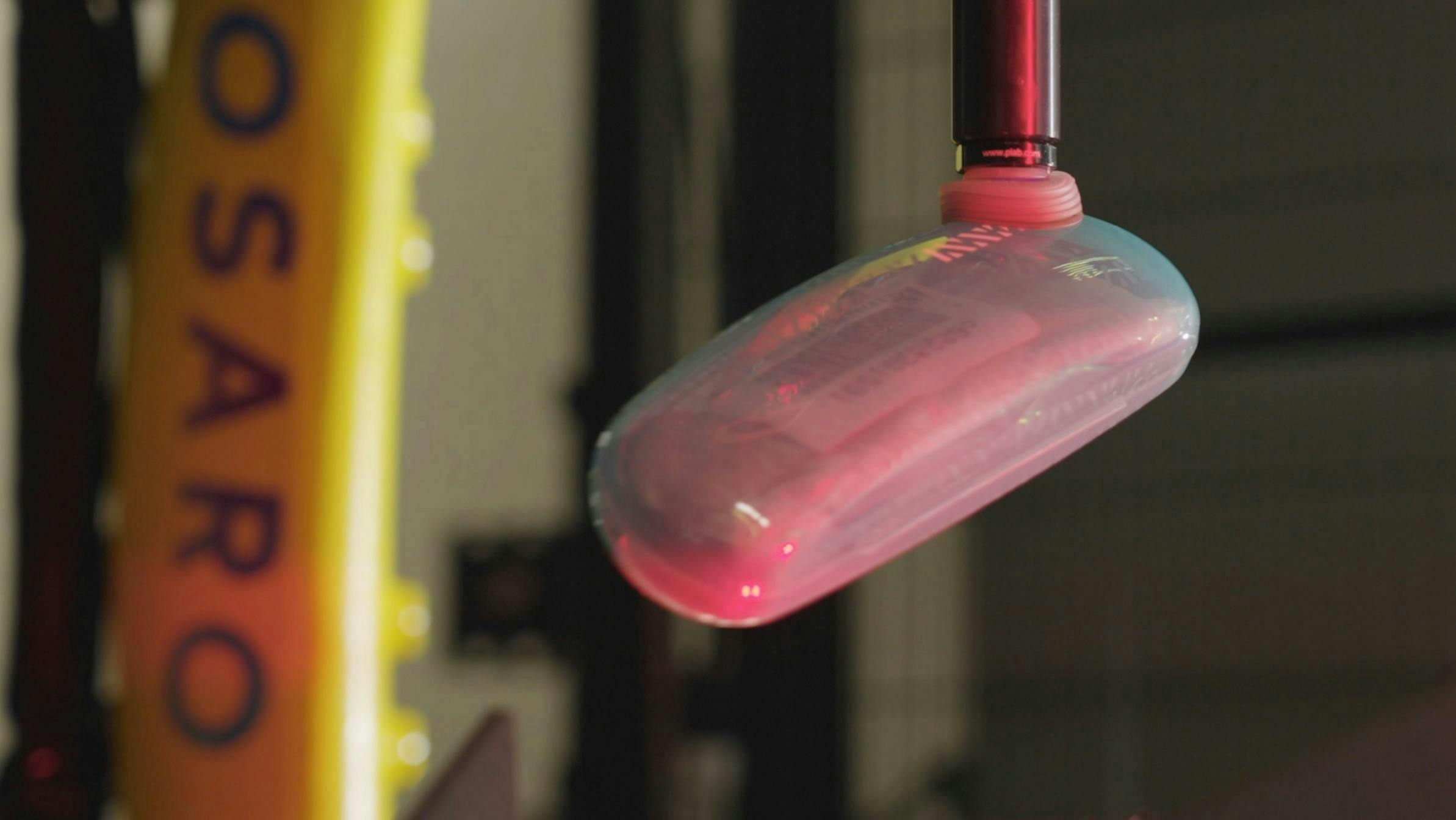

- a custom end-effector that uses proprietary technology (Figure 2).

For the final delivered system, the team worked with several suppliers that provided components including cameras, lights, sensors, scanners and the bagging system.

Functional parameters

The target was to surpass the existing manual throughput of 300 units per hour and eliminate the errors inherent in the manual process. With humans working on the line, the MUO rate was 20 per 100,000 orders.

The first challenge was to grasp the case without covering the barcode, so that the camera could still see it.

Hundreds of eyeglass cases lie randomly oriented in a large Gaylord shipping container. Typically, the AI software identifies the target object and then calculates a grasp point that favors the object’s center of gravity. But sometimes the robot’s gripper ended up covering the barcode.

To locate individual eyeglass cases in the shipping container, a low-cost overhead 3D camera captures a paired RGB image and depth image. From these images, the machine-learning software determines the pick point and approach angle most likely to produce a successful pick, taking into account past successful and failed picks as well as the inherent noise in the RGB and depth data.

Generally, in similar situations, the system is trained to calculate an object’s center of gravity and pick it up accordingly. But here the system needed training to grasp the case while minimizing the possibility of obscuring the barcode. Multiple layered models were trained to ensure the robot consistently selected the optimum grasp point.
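One way to picture this is as a filter-then-rank step: discard candidate grasps whose gripper footprint would cover the barcode, then rank the rest by a learned success probability, with a small penalty for distance from the case’s center. The Python sketch below assumes that an upstream model has already proposed candidates with success probabilities and estimated the barcode’s bounding box in the overhead image. The names, the rectangular footprint and the `cog_weight` value are all hypothetical, and this is not a description of the layered models the team actually trained.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

# Axis-aligned box in overhead-image pixels: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


@dataclass
class GraspCandidate:
    x: float            # pick point, image x (pixels)
    y: float            # pick point, image y (pixels)
    p_success: float    # hypothetical learned probability that this pick succeeds
    footprint: Box      # region the gripper would cover at this pick point


def overlaps(a: Box, b: Box) -> bool:
    """True if two axis-aligned boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def choose_grasp(candidates: Iterable[GraspCandidate],
                 barcode_box: Optional[Box],
                 centroid: Tuple[float, float],
                 cog_weight: float = 0.01) -> Optional[GraspCandidate]:
    """Return the best grasp that leaves the barcode uncovered, or None if none does."""
    best, best_score = None, -math.inf
    for c in candidates:
        if barcode_box is not None and overlaps(c.footprint, barcode_box):
            continue  # hard constraint: never cover the barcode
        dist = math.hypot(c.x - centroid[0], c.y - centroid[1])
        score = c.p_success - cog_weight * dist  # prefer confident picks near the center
        if score > best_score:
            best, best_score = c, score
    return best


# Usage with made-up candidates: the most confident grasp covers the barcode, so it is skipped.
barcode = (45.0, 45.0, 70.0, 70.0)
cands = [
    GraspCandidate(50, 50, 0.95, (40, 40, 60, 60)),   # overlaps the barcode
    GraspCandidate(100, 50, 0.80, (90, 40, 110, 60)),
    GraspCandidate(20, 50, 0.70, (10, 40, 30, 60)),
]
pick = choose_grasp(cands, barcode, centroid=(60.0, 50.0))
print(pick.x, pick.y)  # 100 50
```

Treating barcode occlusion as a hard filter rather than a weighted penalty means a very confident grasp can never win if it hides the code. When no candidate qualifies, the function returns `None`, which a caller could treat as a signal to re-image the container.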

Lastly, if the system determined that the barcode was obscured and could not be read, we routed that case into a separate bin for examination and reintroduction into the supply bin.

The second challenge was reading the barcode. The reflective, semi-opaque blue cases frequently prevented the scanner from reading it.

To ensure the scanner could resolve the barcode, we experimented with numerous combinations of light intensities, wavelengths, and scanners, finally arriving at a specially tuned illumination.

Next, we experimented with different case designs and barcode sizes to ensure the scanner could consistently capture the barcode.
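Experimentation of this kind amounts to a grid search: try every combination of the variables, measure how often the barcode reads, and keep the best. The Python sketch below illustrates that idea under stated assumptions and is not the team’s procedure. `read_once` stands in for one physical read attempt under a given configuration, and the axis names, values and cost-based tie-break are all hypothetical.

```python
import itertools
from typing import Any, Callable, Dict, List, Mapping, Sequence, Tuple

Config = Dict[str, Any]


def sweep(axes: Mapping[str, Sequence[Any]],
          read_once: Callable[[Config], bool],
          trials: int = 50,
          cost: Callable[[Config], float] = lambda cfg: 0.0,
          ) -> Tuple[Config, List[Tuple[Config, float]]]:
    """Measure the read rate of every configuration; return the best and all results.

    Ties on read rate are broken in favor of the lower-cost configuration.
    """
    names = list(axes)
    results: List[Tuple[Config, float]] = []
    for values in itertools.product(*(axes[n] for n in names)):
        cfg = dict(zip(names, values))
        rate = sum(bool(read_once(cfg)) for _ in range(trials)) / trials
        results.append((cfg, rate))
    best_cfg, _ = max(results, key=lambda r: (r[1], -cost(r[0])))
    return best_cfg, results


# Toy stand-in for a physical test (invented behavior, for illustration only):
# reads succeed only at 850 nm with at least 60% intensity.
def toy_read(cfg: Config) -> bool:
    return cfg["wavelength_nm"] == 850 and cfg["intensity_pct"] >= 60


best, table = sweep(
    {"intensity_pct": [40, 60, 80], "wavelength_nm": [630, 850]},
    toy_read,
    trials=10,
    cost=lambda cfg: cfg["intensity_pct"],  # brighter light treated as costlier
)
print(best)  # {'intensity_pct': 60, 'wavelength_nm': 850}
```

Case designs or barcode sizes, which the team also varied, would simply be additional keys in `axes`. The number of combinations multiplies with each new axis, so in practice the grid would likely be narrowed as promising settings emerge.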

Underpinning these optimizations was a trade-off between reliable reads on one side and cycle time and cell cost on the other. Spinning the case in front of the scanner gives it more chances to read the barcode but adds time to each cycle, while every additional light or camera raises the cell’s hardware cost.
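The spin-versus-cycle-time trade-off can be expressed as a read loop with a time budget: rotate the case in fixed increments, try to decode at each angle, and give up once the budget is spent, sending the case to the exception bin described earlier. In the Python sketch below, `decode_at`, the 45-degree step, the 0.25-second cost per step and the 1.5-second budget are all hypothetical values chosen for illustration. Elapsed time is simulated so the logic can be checked without hardware.

```python
from typing import Callable, Optional, Tuple


def spin_and_scan(decode_at: Callable[[float], Optional[str]],
                  step_deg: float = 45.0,
                  seconds_per_step: float = 0.25,
                  budget_s: float = 1.5) -> Tuple[Optional[str], float]:
    """Rotate and retry until the barcode decodes, a full turn is done, or the budget runs out.

    Returns (order_id or None, simulated seconds spent).
    """
    elapsed, angle = 0.0, 0.0
    while angle < 360.0 and elapsed + seconds_per_step <= budget_s:
        elapsed += seconds_per_step       # time to rotate to this angle and attempt one read
        order_id = decode_at(angle)
        if order_id is not None:
            return order_id, elapsed
        angle += step_deg
    return None, elapsed


def route(order_id: Optional[str]) -> str:
    """Readable cases go on to bagging; unreadable ones go to the exception bin."""
    return "bagger" if order_id else "exception_bin"


# Usage: a hypothetical case whose barcode only reads at 90 degrees.
result = spin_and_scan(lambda a: "ORDER-0002" if a == 90.0 else None)
print(result, route(result[0]))  # ('ORDER-0002', 0.75) bagger
```

A larger budget raises the read rate but lengthens every cycle that needs it. For scale, the peak rate of 410 cases per hour quoted in the results corresponds to roughly 8.8 seconds per case (3600 / 410), and the whole pick, scan and place sequence has to fit in that window.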

The third challenge was ensuring a successful placement into the autobagger. With the eyeglass case correctly identified and its label ready for printing, the robot had to position the case on the chute that channels it into the autobagger, where a pre-labeled polybag awaits.

How an object behaves when dropped onto a sloped surface varies enormously and is hard to predict. This step therefore required carefully orienting the case before releasing it, so that it slid smoothly into the autobagger. When we got this wrong, the case got stuck on the chute and caused a blockage.

Due to the numerous variables at play, simulating this entire operation as a digital twin proved impractical.

Instead, we had to work it out through experimentation, using trial and error to systematically tune the orientation of the case. This involved object modeling, so we could consistently ascertain the multi-axis alignment of each eyeglass case in relation to the chute.

After successfully picking up each case, the system scanned it from different angles to determine its orientation.

As the system positioned each case for release, we optimized its orientation above the chute: the system was trained to align the case’s longest axis with the chute’s downward slope.
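For the alignment step, one common way to estimate an object’s long axis is principal component analysis of points on its outline. The sketch below uses the closed-form 2D version and then computes the yaw rotation that brings that axis parallel to the chute’s downhill direction. This is a swapped-in technique for illustration, not necessarily Osaro’s object model. It assumes the outline points and the chute direction are expressed in the same top-down frame, and it ignores pitch and roll, which the real multi-axis alignment also had to handle.

```python
import math
from typing import Iterable, Tuple


def principal_axis_deg(points: Iterable[Tuple[float, float]]) -> float:
    """Angle (degrees, in [-90, 90]) of the direction of greatest spread of 2D points."""
    pts = list(points)
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two points")
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts) / n
    syy = sum((y - my) ** 2 for _, y in pts) / n
    sxy = sum((x - mx) * (y - my) for x, y in pts) / n
    # Closed-form orientation of the major eigenvector of the 2x2 covariance matrix.
    return math.degrees(0.5 * math.atan2(2.0 * sxy, sxx - syy))


def yaw_correction_deg(case_axis_deg: float, chute_axis_deg: float) -> float:
    """Smallest rotation that makes the case's long axis parallel to the chute.

    An axis has no head or tail, so rotations 180 degrees apart are equivalent;
    wrapping into [-90, 90) picks the shorter turn.
    """
    delta = chute_axis_deg - case_axis_deg
    return (delta + 90.0) % 180.0 - 90.0


# Usage: a 4 x 1 outline lying along x; chute downhill direction at 90 degrees in the same frame.
outline = [(0, 0), (4, 0), (4, 1), (0, 1)]
axis = principal_axis_deg(outline)
print(axis, yaw_correction_deg(axis, 90.0))  # 0.0 -90.0
```

Wrapping the correction into a half-turn range reflects the fact that a case sliding either end first still has its long axis along the chute. If the release direction mattered, the wrap would need to cover a full 360 degrees instead.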

We also implemented a series of light beams and sensors so the system could detect blockages on the chute and receive confirmation that each case, along with its paperwork, had been successfully deposited into its uniquely labeled polybag, ready for shipment.
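The beam-and-sensor checks can be reduced to a small classification made after each release. The Python sketch below assumes one light beam across the chute and one sensor confirming arrival in the bag. The field names and the timing thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChuteReadings:
    release_t: float                      # time the gripper released the case (s)
    beam_blocked_since: Optional[float]   # start of the current chute-beam interruption, None if clear
    bag_confirm_t: Optional[float]        # time the in-bag sensor saw the case, None if not yet


def classify_drop(r: ChuteReadings, now: float,
                  jam_threshold_s: float = 1.0,
                  confirm_timeout_s: float = 2.0) -> str:
    """Return 'deposited', 'jam', 'missing', or 'in_transit' for the latest release."""
    if r.bag_confirm_t is not None and r.bag_confirm_t >= r.release_t:
        return "deposited"          # the bag sensor confirmed this release
    if r.beam_blocked_since is not None and now - r.beam_blocked_since >= jam_threshold_s:
        return "jam"                # something has sat in the beam too long: stop and alert
    if now - r.release_t > confirm_timeout_s:
        return "missing"            # no confirmation in time: flag the order for checking
    return "in_transit"             # still within the expected slide time


print(classify_drop(ChuteReadings(0.0, None, 0.8), now=1.0))   # deposited
print(classify_drop(ChuteReadings(0.0, 0.2, None), now=1.5))   # jam
```

Checking for a jam before declaring a case missing lets the cell stop feeding the chute as soon as a blockage is detected, rather than waiting for the confirmation timeout.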

Project results

The robotic system has streamlined what was formerly a five-step process to just two steps. The result? Errors approaching zero.

The final production system has demonstrated a 50% throughput increase and enables two people working with the robot to perform work that previously required three people.

Each robotic system can pick, scan, bag and label up to 410 pairs of eyeglasses per hour. When downtime to replenish bags or maintain the system is factored in, the average pace is closer to 350 per hour, though the system’s machine learning is expected to keep improving performance over time.

The new robotic system has also reduced the MUO rate from 20 per 100,000 orders to just 2.5 per 100,000 orders. Zenni’s productivity gain comes not only from the robots’ fast, consistent throughput but also from needing fewer people to process shipments, which lets Zenni assign team members to other tasks such as unpacking boxes or processing returns.
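The headline figures can be cross-checked with a little arithmetic. The Python snippet below uses only numbers quoted in this article. The `uptime_share` line rests on a rough simplifying assumption: that the entire gap between the 410-per-hour peak and the 350-per-hour average is downtime.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after` (negative means a reduction)."""
    return (after - before) / before * 100.0


MANUAL_UPH = 300          # existing manual throughput (units per hour)
PEAK_UPH = 410            # robot peak rate
AVG_UPH = 350             # robot average rate including downtime
MUO_BEFORE = 20.0         # messed-up orders per 100,000, manual line
MUO_AFTER = 2.5           # messed-up orders per 100,000, robotic line

peak_gain = pct_change(MANUAL_UPH, PEAK_UPH)     # about +36.7 %
avg_gain = pct_change(MANUAL_UPH, AVG_UPH)       # about +16.7 %
muo_change = pct_change(MUO_BEFORE, MUO_AFTER)   # -87.5 %, i.e. 8x fewer errors
work_per_person = 3 / 2                          # two people doing the work of three: 1.5x
uptime_share = AVG_UPH / PEAK_UPH                # about 0.854, if the gap is all downtime
seconds_per_case = 3600 / PEAK_UPH               # about 8.8 s per case at peak

print(f"{peak_gain:.1f}% {avg_gain:.1f}% {muo_change:.1f}% "
      f"{work_per_person:.2f}x {uptime_share:.3f} {seconds_per_case:.2f}s")
# prints: 36.7% 16.7% -87.5% 1.50x 0.854 8.78s
```

The hourly-rate comparisons and the work-per-person ratio measure different things, the cell’s output against the manual line versus the work handled by each person, so they are not expected to produce the same percentage.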

The robots enable Zenni to cope when seasonal surges spike to as many as 450,000 units in a single month. They don’t need breaks and can work 24x7. Recruiting temporary workers when needed is also easier, because training temps on the robots is much simpler than training them on the manual process.
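To put the 450,000-unit surge in perspective, a back-of-envelope capacity estimate helps. The sketch below assumes a 30-day month, round-the-clock operation and the roughly 350-per-hour average rate per cell quoted above. The article does not say how many cells Zenni runs or how surge volume is split between robots and people, so the result is illustrative only.

```python
import math
from typing import Tuple


def cells_needed(units_per_month: float, uph_per_cell: float,
                 hours_per_day: float = 24.0, days: int = 30) -> Tuple[float, int]:
    """Return (robot-hours required, whole cells needed) to meet a monthly volume."""
    robot_hours = units_per_month / uph_per_cell
    hours_available_per_cell = hours_per_day * days
    return robot_hours, math.ceil(robot_hours / hours_available_per_cell)


robot_hours, cells = cells_needed(450_000, 350)
print(f"{robot_hours:.1f} robot-hours -> {cells} cell(s) running 24x7")
# prints: 1285.7 robot-hours -> 2 cell(s) running 24x7
```

Under the same assumptions, a single cell handles about 252,000 units in 30 days (350 × 720 hours). This is why continuous operation without breaks matters most during surge months.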

Teammates who work in the Zenni facility like it, too. “Managing the robot is easier and better than stuffing packages,” says Minjie Hu, who just completed training as a robot operator. “Learning new skills is better.”

Those who used to spend their workdays picking up eyeglass cases and putting them in bags now monitor the robots. The work is much less tedious and offers them new opportunities to acquire technical skills and expand their career horizons.